
The world of artificial intelligence is constantly evolving, and one of the most captivating areas of progress is in the realm of voice technology. Recently, a new contender has emerged, generating significant buzz and excitement within the AI community and beyond: the Sesame voice model, officially known as the Conversational Speech Model (CSM). This technology has rapidly garnered attention for its remarkable ability to produce speech that sounds strikingly human, blurring the lines between artificial and natural communication. Initial reactions have been overwhelmingly positive, with users and experts alike expressing astonishment at the model's naturalness and emotional expressiveness. Some have even noted the difficulty in distinguishing CSM's output from that of a real person, signaling a potential breakthrough in the long-sought goal of crossing the "uncanny valley" of artificial speech. This achievement is particularly noteworthy as it promises to make interactions with AI feel less robotic and more intuitive, potentially revolutionizing how we engage with technology.

The pursuit of realistic AI voice is a pivotal milestone in the broader journey of artificial intelligence. For years, the robotic and often monotone nature of AI speech has been a barrier to seamless human-computer interaction. The ability to generate voice that conveys emotion, nuance, and natural conversational flow is crucial for creating truly useful and engaging AI companions. Sesame AI, the team behind this innovation, aims to achieve precisely this. Their mission is centered around creating voice companions that can genuinely enhance daily life, making computers feel more lifelike by enabling them to communicate with humans in a natural and intuitive way, with voice being a central element. The core objective is to attain what they term "voice presence" - a quality that makes spoken interactions feel real, understood, and valued, fostering confidence and trust over time. This blog post will delve into the intricacies of the Sesame voice model, exploring its architecture, key features, performance compared to other models, potential applications, ethical considerations, and the implications of its recent open-source release.

The technology at the heart of the recent excitement is officially named the "Conversational Speech Model," or CSM. This model represents a significant advancement in the field of AI speech synthesis, designed with the explicit goal of achieving real-time, human-like conversation. The team at Sesame AI is driven by a clear mission: to develop voice companions that are genuinely useful in the everyday lives of individuals. This involves not just the generation of speech, but the creation of AI that can see, hear, and collaborate with humans naturally. A central tenet of their approach is the focus on natural human voice as the primary mode of interaction. The ultimate aim of their research and development efforts is to achieve "voice presence". This concept goes beyond mere clarity of pronunciation; it encompasses the ability of an AI voice to sound natural and believable, and to create a sense of genuine connection and understanding with the user. It's about making the interaction feel less like a transaction with a machine and more like a conversation with another intelligent being.

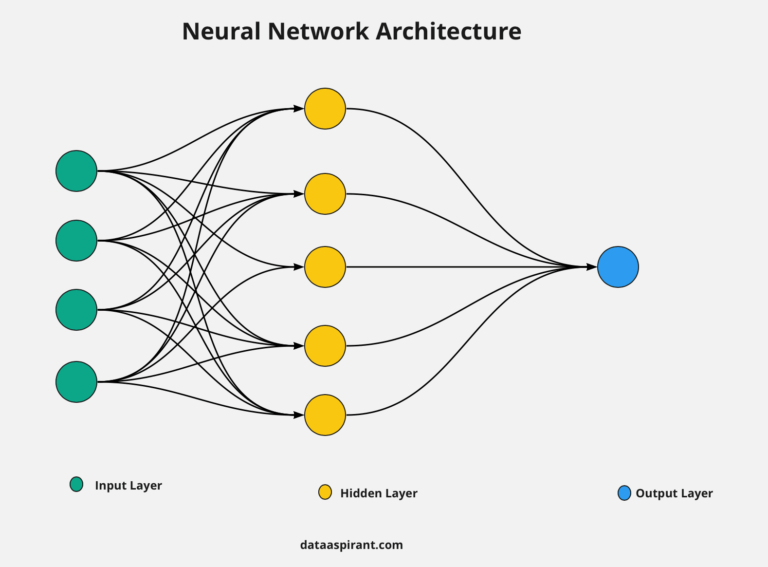

The remarkable naturalness of the Sesame voice model is underpinned by a sophisticated technical architecture that departs from traditional text-to-speech (TTS) methods. A key aspect of CSM is its end-to-end multimodal architecture. Unlike conventional TTS pipelines that first generate text and then synthesize audio as separate steps, CSM processes both text and audio context together within a unified framework. This allows the AI to essentially "think" as it speaks, producing not just words but also the subtle vocal behaviors that convey meaning and emotion. This is achieved through the use of two autoregressive transformer networks working in tandem. A robust backbone processes interleaved text and audio tokens, incorporating the full conversational context, while a dedicated decoder reconstructs high-fidelity audio. This design enables the model to dynamically adjust its output in real-time, modulating tone and pace based on previous dialogue cues.

Another crucial element is the advanced tokenization via Residual Vector Quantization (RVQ). CSM employs a dual-token strategy using RVQ to deliver the fine-grained variations that characterize natural human speech, allowing for dynamic emotional expression that traditional systems often lack. This involves two types of learned tokens: semantic tokens, which capture the linguistic content and high-level speech traits, and acoustic tokens, which preserve detailed voice characteristics like timbre, pitch, and timing. By operating directly on these discrete audio tokens, CSM can generate speech without an intermediate text-only step, potentially contributing to its increased expressivity.
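To make the idea of residual quantization more concrete, here is a minimal sketch in plain Python. It is not CSM's tokenizer: the two tiny codebooks are hand-written for illustration (real codebooks are learned), and the names `rvq_encode` and `rvq_decode` are hypothetical. It only shows the general mechanism, where each level quantizes whatever the previous level failed to capture.

```python
from typing import List, Sequence

Vector = Sequence[float]


def nearest(codebook: Sequence[Vector], target: Vector) -> int:
    """Return the index of the codebook entry closest to target (squared L2)."""
    def sq_dist(entry: Vector) -> float:
        return sum((e - t) ** 2 for e, t in zip(entry, target))
    return min(range(len(codebook)), key=lambda i: sq_dist(codebook[i]))


def rvq_encode(frame: Vector, codebooks: Sequence[Sequence[Vector]]) -> List[int]:
    """Quantize one frame into one code index per codebook level."""
    residual = list(frame)
    codes = []
    for codebook in codebooks:
        idx = nearest(codebook, residual)
        codes.append(idx)
        # Later levels only have to explain what earlier levels missed.
        residual = [r - c for r, c in zip(residual, codebook[idx])]
    return codes


def rvq_decode(codes: Sequence[int], codebooks: Sequence[Sequence[Vector]]) -> List[float]:
    """Rebuild an approximation by summing the chosen entry from each level."""
    out = [0.0] * len(codebooks[0][0])
    for idx, codebook in zip(codes, codebooks):
        out = [o + c for o, c in zip(out, codebook[idx])]
    return out


# Toy, hand-written codebooks (assumption): a coarse level, then a fine level.
CODEBOOKS = [
    [(0.0, 0.0), (1.0, 1.0), (-1.0, 1.0)],
    [(0.0, 0.0), (0.25, 0.0), (0.0, -0.25)],
]

codes = rvq_encode((1.2, 0.9), CODEBOOKS)
approx = rvq_decode(codes, CODEBOOKS)
print(codes, approx)
```

In this toy setup the first code carries the coarse shape of the frame and the second adds a small correction, which loosely mirrors the split between higher-level and fine-detail tokens described above.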

Furthermore, CSM incorporates context-aware prosody modeling. In human conversation, context is vital for determining the appropriate tone, emphasis, and rhythm. CSM addresses this by processing previous text and audio inputs to build a comprehensive understanding of the conversational flow. This context then informs the model's decisions regarding intonation, rhythm, and pacing, allowing it to choose among numerous valid ways to render a sentence. This capability allows CSM to sound more natural in dialogue by adapting its tone and expressiveness based on the conversation's history.

Training high-fidelity audio models is typically computationally intensive. CSM utilizes efficient training through compute amortization to manage memory overhead and accelerate development cycles. The model's transformer backbone is trained on every audio frame, capturing comprehensive context, while the audio decoder is trained on a random subset of frames, significantly reducing memory requirements without sacrificing performance.
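The frame-sampling idea can be sketched in a few lines. This is only an illustration under stated assumptions: the function name `pick_decoder_frames` and the 1/16 fraction are hypothetical choices for the example, not figures taken from this post, and no actual training happens here.

```python
import random
from typing import List


def pick_decoder_frames(num_frames: int, fraction: float, rng: random.Random) -> List[int]:
    """Choose the random subset of frame indices the decoder would be trained on."""
    if num_frames <= 0:
        raise ValueError("num_frames must be positive")
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be in (0, 1]")
    keep = max(1, round(num_frames * fraction))
    return sorted(rng.sample(range(num_frames), keep))


rng = random.Random(0)                       # seeded so the sketch is repeatable
backbone_frames = list(range(64))            # the backbone sees every frame
decoder_frames = pick_decoder_frames(64, 1 / 16, rng)  # the decoder sees a sample

print(len(backbone_frames), len(decoder_frames))
```

The memory saving comes from the decoder's loss being computed on only a handful of frames per step, while the backbone still receives the full sequence as context.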

Finally, the architecture of CSM leverages a Llama backbone from Meta, a testament to the power of transfer learning in AI. This robust language model foundation is coupled with a smaller, specialized audio decoder that produces Mimi audio codes. This combination allows CSM to benefit from the linguistic understanding capabilities of the Llama architecture while having a dedicated component focused on generating high-quality, natural-sounding audio.

Several key capabilities contribute to the exceptional performance and lifelike quality of the Sesame voice model. One of the most significant is its emotional intelligence. CSM is designed to interpret and respond to the emotional context of a conversation, allowing it to modulate its tone and delivery to match the user's mood. This includes the ability to detect cues of emotion and respond with an appropriate tone, such as sounding empathetic when the user is upset, and even demonstrating a prowess in detecting nuances like sarcasm.

Another crucial capability is contextual awareness and memory. CSM adjusts its output based on the history of the conversation, allowing it to maintain coherence and relevance over extended dialogues. By processing previous text and audio inputs, the model builds a comprehensive understanding of the conversational flow, enabling it to reference earlier topics and maintain a consistent style.

The model also exhibits remarkable natural conversational dynamics. Unlike the often rigid and stilted speech of older AI systems, CSM incorporates natural pauses, filler words like "ums," and even laughter, mimicking the way humans naturally speak. It can also handle the timing and flow of dialogue, knowing when to pause, interject, or yield, contributing to a more organic feel. Furthermore, it demonstrates user experience improvements such as gradually fading the volume when interrupted, a behavior more akin to human interaction.

The voice cloning potential of CSM is another highly discussed capability. The model has the ability to replicate voice characteristics from audio samples, even with just a minute of source audio. While the open-sourced base model is not fine-tuned for specific voices, this capability highlights the underlying power of the technology to capture and reproduce the nuances of individual voices.

Enabling a fluid and responsive conversational experience is the real-time interaction and low latency of CSM. Users have reported barely noticing any delay when interacting with the model. Official benchmarks indicate an end-to-end latency of less than 500 milliseconds, with an average of 380ms, facilitating a natural back-and-forth flow in conversations.

Finally, CSM's multilingual support is limited at present: the model is trained primarily on English audio. There are plans to expand language support in the future, but the current version has only incidental capacity for non-English languages, picked up through data contamination in the training process, and is likely to struggle with them.

The emergence of Sesame's CSM has naturally led to comparisons with existing prominent voice models from companies like OpenAI, Google, and others. In many aspects, Sesame has been lauded for its superior naturalness and expressiveness. Users and experts often compare it favorably to OpenAI's ChatGPT voice mode, Google's Gemini, as well as more established assistants like Siri and Alexa. Many find CSM's conversational fluency and emotional expression to surpass those of mainstream models. Some have even described the realism as significantly more advanced, with the AI performing more like a human with natural imperfections rather than a perfect, but potentially sterile, customer service agent.

A key strength of Sesame lies in its conversational flow. It is often noted for its organic and flowing feel, making interactions feel more like a conversation with a real person. The model's ability to seamlessly continue a story or conversation even after interruptions is a notable improvement over some other AI assistants that might stumble or restart in such situations.

However, there are potential limitations. The open-sourced version, CSM-1B, is a 1-billion-parameter model. While this size allows it to run on more accessible hardware, it might also impact the overall depth and complexity of the language model compared to the much larger models behind systems like ChatGPT or Gemini. Some users have suggested that while Sesame excels in naturalness, it might be less "deep and complex" or less strong in following specific instructions compared to these larger counterparts. Additionally, the model seems to perform best with shorter audio snippets, such as sentences, rather than lengthy paragraphs.

Despite these potential limitations, Sesame introduces notable UX improvements. Features like the gradual fading of volume when the user interrupts feel more natural and human-like compared to the abrupt stops often encountered with other voice assistants.

To provide a clearer comparison, the following table summarizes some key differences and similarities between Sesame (CSM) and other prominent voice models based on the available information:

This comparison suggests that Sesame's primary strength lies in the quality and naturalness of its voice interaction. While it might not have the sheer breadth of knowledge or instruction-following capabilities of larger language models, its focus on creating a truly human-like conversational experience positions it as a significant advancement in the field.

The exceptional realism and natural conversational flow of the Sesame voice model open up a wide array of potential applications across various industries and in everyday life. One of the most immediate and impactful areas is in enhanced AI assistants and companions. By creating more lifelike and engaging interactions, Sesame's technology could lead to AI companions that feel more like genuine conversational partners, capable of building trust and providing more intuitive support.

The potential for revolutionizing customer service is also significant. Imagine customer support interactions that feel empathetic and natural, where the AI can truly understand and respond to the customer's emotional state. This could lead to more positive customer experiences and potentially reduce operational costs for businesses.

Furthermore, Sesame's technology could greatly contribute to improving accessibility for individuals with disabilities, offering more natural and engaging ways to interact with technology through voice.

In the realm of content creation, CSM could be a game-changer for audiobooks, podcasts, and voiceovers. The ability to generate highly realistic voices with natural emotional inflections could make listening experiences far more engaging and immersive.

Education and training could also be transformed, with AI tutors and learning tools that can engage students in more natural and personalized ways.

The healthcare industry presents numerous possibilities. Applications in AI doctors for initial consultations, triage, and even generating medical notes during patient interactions could become more effective and user-friendly with a natural-sounding voice.

The integration of Sesame's voice model into smart devices and the Internet of Things (IoT) could lead to more natural and intuitive voice interfaces in cars, homes, and wearable technology like the lightweight eyewear being developed by Sesame themselves. This could move beyond simple commands to more fluid and context-aware interactions.

Augmented reality applications could also benefit, with natural voice interactions enhancing immersive experiences and providing a more seamless way to interact with digital overlays in the real world.

The natural dialogue and low latency of CSM could streamline voice commerce, making voice-activated purchases a more viable and user-friendly option.

Finally, by analyzing conversations and user preferences, AI powered by Sesame could offer personalized content recommendations in a more natural and engaging way, strengthening brand connections and user engagement.

The remarkable realism of the Sesame voice model, particularly its voice cloning potential, brings forth significant ethical considerations that must be carefully navigated. One of the primary concerns is the risk of impersonation and fraud. The ability to easily replicate voices opens the door to malicious actors potentially using this technology to mimic individuals for fraudulent purposes, such as voice phishing scams, which could become alarmingly convincing.

The potential for misinformation and deception is another serious concern. AI-generated speech could be used to create fake news or misleading content, making it difficult for individuals to discern what is real and what is fabricated.

Interestingly, Sesame has opted for a reliance on an honor system and ethical guidelines rather than implementing strict built-in technical safeguards against misuse. While the company explicitly prohibits impersonation, fraud, misinformation, deception, and illegal or harmful activities in its terms of use, the ease with which voice cloning can be achieved raises questions about the effectiveness of these guidelines alone. This approach places a significant responsibility on developers and users to act ethically and avoid misusing the technology.

Beyond the immediate risks of misuse, there are also privacy concerns related to the analysis of conversations, particularly if this technology becomes integrated into everyday devices. Robust data security and transparency will be crucial to address these concerns and comply with regulations like GDPR.

Finally, the very realism of the voice model could lead to unforeseen psychological implications. As AI voices become increasingly human-like, some users might develop emotional attachments, blurring the lines between human and artificial interaction. The feeling of "uncanny discomfort" that can arise from interacting with something almost, but not quite, human is also a factor to consider.

A significant development in the story of the Sesame voice model is the decision by Sesame AI to release its base model, CSM-1B, as open source under the Apache 2.0 license. This move has profound implications for the future of voice technology. The model and its checkpoints are readily available on platforms like GitHub and Hugging Face, making this advanced technology accessible to developers and researchers worldwide.

The Apache 2.0 license is particularly significant as it allows for commercial use of the model with minimal restrictions. This has the potential to foster rapid innovation and research in the field of conversational AI, as the community can now build upon and improve the model, explore its capabilities, and discover new applications.

This open-source release marks a step towards the democratization of high-quality voice synthesis. For years, advanced voice technology has been largely controlled by major tech companies. By making CSM-1B available, Sesame is empowering smaller companies and independent developers who might not have the resources to build proprietary voice systems from scratch. This could lead to a proliferation of new applications and integrations of natural-sounding speech in various products and services, potentially inspiring creative implementations in unexpected places, from new cars to next-generation IoT devices.

To utilize the open-source CSM-1B model, certain requirements typically need to be met, including a CUDA-compatible GPU, Python 3.10 or higher, and a Hugging Face account with access to the model repository. Users also need to accept the terms and conditions on Hugging Face to gain access to the model files. It's important to note that the open-sourced CSM-1B is a base generation model, meaning it is capable of producing a variety of voices but has not been fine-tuned on any specific voice. Further fine-tuning may be required for specific use cases, including voice cloning for particular individuals.
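Before downloading anything, a quick preflight check along the following lines can confirm the basics. This is a rough sketch, not part of Sesame's tooling: the `preflight` helper is hypothetical, looking for `nvidia-smi` on the PATH is only a heuristic for a CUDA-capable setup, and it assumes the Hugging Face token is supplied through the `HF_TOKEN` environment variable.

```python
import os
import shutil
import sys
from typing import Dict

MIN_PYTHON = (3, 10)  # minimum version named in the requirements above


def preflight(env: Dict[str, str]) -> Dict[str, bool]:
    """Report which of the stated requirements appear to be satisfied."""
    return {
        "python_ok": sys.version_info >= MIN_PYTHON,
        # Heuristic only: the NVIDIA driver tool on PATH suggests a CUDA GPU.
        "gpu_tool_found": shutil.which("nvidia-smi") is not None,
        # Assumption: the access token is provided via the HF_TOKEN variable.
        "hf_token_set": bool(env.get("HF_TOKEN")),
    }


report = preflight(dict(os.environ))
for name, ok in report.items():
    print(f"{name}: {'ok' if ok else 'missing'}")
```

A passing report does not guarantee access: the model's terms still have to be accepted on Hugging Face with the account that owns the token.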

The Sesame voice model, particularly its Conversational Speech Model (CSM), represents a significant leap forward in the field of AI voice technology. Its ability to generate speech with remarkable naturalness and emotional expressiveness has captured the attention of the AI community and sparked discussions about the future of human-computer interaction. The model's end-to-end multimodal architecture, advanced RVQ tokenization, and context-aware prosody modeling contribute to a level of realism that often surpasses existing mainstream voice models.

The potential applications of this technology are vast, spanning across AI assistants, customer service, content creation, healthcare, smart devices, and more. The heightened realism promises to create more intuitive and engaging experiences for users across various domains.

However, the power of Sesame's voice model also brings forth critical ethical considerations, primarily concerning the risks of impersonation, fraud, and the spread of misinformation through voice cloning. The reliance on ethical guidelines and an honor system underscores the importance of responsible development and use of this technology.

The decision by Sesame AI to open-source its base model, CSM-1B, under the Apache 2.0 license is a pivotal moment. This democratization of advanced voice technology has the potential to accelerate innovation, foster new applications, and empower a wider community of developers and researchers to contribute to the evolution of conversational AI.

In conclusion, Sesame AI is not just improving AI speech; it is setting a new standard for what is possible in human-computer interaction through voice. By pushing the boundaries of realism and naturalness, Sesame is shaping a future where our conversations with artificial intelligence can be more seamless, engaging, and ultimately, more human.

The Dawn of Believable AI Voices explores Sesame's advanced conversational speech model, highlighting its breakthrough in generating natural, expressive AI voices that enhance human-computer interactions.

Artificial Intelligence (AI) has evolved into a broad and multi-faceted field, with two prominent branches emerging as transformative forces in modern technology: Generative AI and Predictive AI. While both leverage advanced machine learning techniques, they serve different purposes and excel in distinct applications. This blog delves into the technical distinctions between generative and predictive AI, highlighting their underlying architectures, methodologies, and practical implementations across industries.

Generative AI is a subset of AI that focuses on creating new data instances resembling the training data. It leverages models that learn the underlying patterns and structures of input data, enabling them to generate outputs that are not merely replications but creative constructs. These outputs can range from images, text, audio, and even entire virtual environments.

Generative AI primarily utilizes unsupervised and self-supervised learning techniques. The key architectures powering generative AI include:

Generative AI has found applications across numerous industries:

Predictive AI, on the other hand, is focused on forecasting future events based on historical data. It is fundamentally about building models that can analyze patterns and trends within datasets to predict outcomes. Predictive AI is heavily used in analytics, risk assessment, and decision-making processes.

Predictive AI predominantly relies on supervised learning techniques where models are trained on labeled datasets. Key components of predictive AI include:

Predictive AI is widely utilized in:

When deciding which approach to adopt, consider the following:

In some advanced applications, both generative and predictive AI models can complement each other. For example, generative AI can create synthetic data that enhances predictive AI models' performance by providing more diverse training samples.
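As a toy illustration of that pairing, the sketch below fits a very simple generative model (one Gaussian per class), samples synthetic points from it, and trains a nearest-centroid classifier on the enlarged dataset. Everything here is an assumption made for the example: the sensor readings are invented, and real systems would use far richer generators and predictors.

```python
import random
import statistics
from typing import Dict, List, Tuple


def fit_gaussians(samples: Dict[str, List[float]]) -> Dict[str, Tuple[float, float]]:
    """Generative step: model each class as a 1-D Gaussian (mean, stdev)."""
    return {
        label: (statistics.mean(xs), statistics.stdev(xs))
        for label, xs in samples.items()
    }


def synthesize(params: Dict[str, Tuple[float, float]], n_per_class: int,
               rng: random.Random) -> Dict[str, List[float]]:
    """Draw synthetic samples for every class from its fitted Gaussian."""
    return {
        label: [rng.gauss(mu, sigma) for _ in range(n_per_class)]
        for label, (mu, sigma) in params.items()
    }


def train_centroids(samples: Dict[str, List[float]]) -> Dict[str, float]:
    """Predictive step: a nearest-centroid classifier needs one mean per class."""
    return {label: statistics.mean(xs) for label, xs in samples.items()}


def predict(centroids: Dict[str, float], x: float) -> str:
    return min(centroids, key=lambda label: abs(centroids[label] - x))


# Invented readings (assumption): only three real samples per class.
real = {"normal": [9.8, 10.1, 10.3], "faulty": [14.7, 15.2, 15.4]}

rng = random.Random(42)
synthetic = synthesize(fit_gaussians(real), 50, rng)
augmented = {label: real[label] + synthetic[label] for label in real}
model = train_centroids(augmented)

print(predict(model, 10.0), predict(model, 15.0))
```

The synthetic samples only reflect what the generator learned from the real data, so they add variety rather than new information; that trade-off is worth keeping in mind when using this approach.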

Both generative and predictive AI offer powerful tools for leveraging data, but their applications and methodologies differ significantly. Generative AI shines in creativity, content creation, and simulations, while predictive AI excels in forecasting, analytics, and strategic decision-making. By understanding these distinctions, businesses and technologists can make informed decisions on which approach aligns best with their objectives, ultimately driving innovation and efficiency across industries.


Yes, AI is spreading like wildfire. It is revolutionizing all industries, including manufacturing. It offers solutions that enhance efficiency, reduce costs, and drive innovation - through demand prediction, real-time quality control, smart automation, and predictive maintenance. The use cases below show how AI can cut costs, reduce downtime, and overcome various roadblocks in manufacturing processes.

A recent survey by Deloitte revealed that over 80% of manufacturing professionals reported that labor turnover had disrupted production in 2024. This disruption is anticipated to persist, potentially leading to delays and increased costs throughout the value chain in 2025.

Artificial Intelligence (AI) can help us take great strides here - reducing cost and enhancing efficiency. Research shows that the global market for AI in manufacturing is poised to be valued at $20.8 billion by 2028. Let's look at some of the most practical uses that are already being implemented:

Accurate demand forecasting is crucial for manufacturers to balance production and inventory levels. Overproduction leads to excess inventory and increased costs, while underproduction results in stockouts and lost sales. AI-driven machine learning algorithms analyze vast amounts of historical data, including seasonal trends, past sales, and buying patterns, to predict future product demand with high accuracy. These models also incorporate external factors such as market trends and social media sentiment, enabling manufacturers to adjust production plans in real-time in response to sudden market fluctuations or supply chain disruptions. Implementing AI in demand forecasting leads to better resource management, improved environmental sustainability, and more efficient operations.
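A very small example helps show what "learning from historical demand" means in practice. The sketch below uses simple exponential smoothing, a classical baseline rather than the machine learning models described above; the function name and the sales figures are assumptions made for illustration.

```python
from typing import Sequence


def exp_smooth_forecast(history: Sequence[float], alpha: float) -> float:
    """Forecast next-period demand with simple exponential smoothing.

    alpha close to 1 reacts quickly to recent demand; alpha close to 0
    leans on the longer-run level.
    """
    if not history:
        raise ValueError("history must contain at least one period")
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    level = history[0]
    for demand in history[1:]:
        level = alpha * demand + (1 - alpha) * level
    return level


# Invented monthly unit sales (assumption).
monthly_units = [100, 120, 110]
print(exp_smooth_forecast(monthly_units, alpha=0.5))
```

Production systems typically go further by modelling seasonality and adding the external signals mentioned above, but the core loop of updating an estimate as each new period arrives is the same.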

Supply chain optimization is a critical aspect of manufacturing that directly impacts revenue management. AI enhances supply chain operations by providing real-time insights into various factors such as demand patterns, inventory levels, and logistics. By analyzing this data, AI systems can predict demand fluctuations, optimize inventory management, and streamline logistics, leading to reduced operational costs and improved customer satisfaction. For instance, AI can automate the generation of purchase orders or replenishment requests based on demand forecasts and predefined inventory policies, ensuring that manufacturers maintain optimal stock levels without overproduction.
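The purchase-order automation mentioned above can be sketched as a reorder-point rule. This is a simplified illustration, not a description of any specific product: the `InventoryPolicy` fields, the `plan_replenishment` helper, and all numbers are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class InventoryPolicy:
    lead_time_days: int   # days between placing and receiving an order
    cover_days: int       # days of demand a fresh order should cover
    safety_stock: int     # buffer held against forecast error
    order_multiple: int   # supplier pack size


@dataclass
class PurchaseOrder:
    sku: str
    quantity: int


def plan_replenishment(sku: str, on_hand: int, daily_forecast: float,
                       policy: InventoryPolicy) -> Optional[PurchaseOrder]:
    """Return a purchase order if stock is at or below the reorder point."""
    reorder_point = daily_forecast * policy.lead_time_days + policy.safety_stock
    if on_hand > reorder_point:
        return None
    target = daily_forecast * policy.cover_days + policy.safety_stock
    shortfall = target - on_hand
    if shortfall <= 0:
        return None
    # Round up to whole supplier packs.
    packs = math.ceil(shortfall / policy.order_multiple)
    return PurchaseOrder(sku=sku, quantity=packs * policy.order_multiple)


policy = InventoryPolicy(lead_time_days=5, cover_days=14,
                         safety_stock=100, order_multiple=50)
print(plan_replenishment("WIDGET-1", on_hand=250, daily_forecast=40, policy=policy))
print(plan_replenishment("WIDGET-1", on_hand=400, daily_forecast=40, policy=policy))
```

The forecast feeds both the trigger (the reorder point) and the order size, which is why better demand predictions translate directly into fewer stockouts and less excess inventory.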

Maintaining high-quality standards is essential in manufacturing, and AI plays a significant role in enhancing quality control processes. By integrating AI with computer vision, manufacturers can detect product defects in real-time with high accuracy. For example, companies like Foxconn have implemented AI-powered computer vision systems to identify product errors during the manufacturing process, resulting in a 30% reduction in product defects. These systems can inspect products for defects more accurately and consistently than human inspectors, ensuring high standards are maintained.

Mining, metals, and other heavy industrial companies lose 23 hours per month to machine failures, costing several millions of dollars.

Unplanned equipment downtime can lead to significant financial losses in manufacturing. AI addresses this challenge through predictive maintenance, which involves analyzing data from various sources such as IoT sensors, PLCs, and ERPs to assess machine performance parameters. By monitoring these parameters, AI systems can predict potential equipment failures before they occur, allowing for timely maintenance interventions. This approach minimizes unplanned outages, reduces maintenance costs, and extends the lifespan of machinery. For instance, AI algorithms can study machine usage data to detect early signs of wear and tear, enabling manufacturers to schedule repairs in advance and minimize downtime.
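One simple way to picture "detecting early signs of wear" is a drift check against a healthy baseline. The sketch below flags the first sensor reading that sits more than a chosen number of standard deviations above the baseline mean. It is a basic statistical rule standing in for the learned models described above; the `first_alert` helper, the threshold, and the vibration values are assumptions.

```python
import statistics
from typing import Optional, Sequence


def first_alert(readings: Sequence[float], baseline_size: int,
                threshold: float) -> Optional[int]:
    """Return the index of the first reading that drifts above the baseline.

    The first `baseline_size` readings are treated as healthy operation.
    """
    if baseline_size < 2 or baseline_size > len(readings):
        raise ValueError("baseline_size must be between 2 and len(readings)")
    baseline = readings[:baseline_size]
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)
    if stdev == 0:
        return None  # a perfectly flat baseline gives no scale to compare against
    for i, value in enumerate(readings[baseline_size:], start=baseline_size):
        if (value - mean) / stdev > threshold:
            return i
    return None


# Invented vibration readings in mm/s (assumption); the last few drift upward.
vibration = [2.0, 2.1, 1.9, 2.0, 2.1, 1.9, 2.2, 2.6, 3.1]
print(first_alert(vibration, baseline_size=6, threshold=3.0))
```

An alert raised at the first drifting reading gives the maintenance team time to schedule a repair before the later, larger deviations turn into an outage.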

AI enhances product design and development by enabling manufacturers to explore innovative configurations that may not be evident through traditional methods. Generative AI allows for the exploration of various design possibilities, optimizing product performance and material usage. AI-driven simulation tools can virtually test these designs under different conditions, reducing the need for physical prototypes and accelerating the development process. This approach not only shortens time-to-market but also results in products that are optimized for performance and cost-effectiveness.

Several leading manufacturers have successfully implemented AI to enhance their operations:

The integration of AI in manufacturing is not just a trend but a necessity for staying competitive in today's dynamic market. By adopting AI technologies, manufacturers can enhance operational efficiency, reduce costs, and drive innovation. As the industry continues to evolve, embracing AI will be crucial for meeting the demands of the ever-changing manufacturing landscape.

In conclusion, AI offers transformative potential for the manufacturing industry, providing practical solutions that address key challenges and pave the way for a more efficient and innovative future. Want to make a leap in your manufacturing process? Let's do it!

The integration of AI in manufacturing can enhance operational efficiency, reduce costs, and drive innovation - with predictive analysis, supply chain optimization and much more. Read 5 such use cases of AI in the manufacturing industry.

Everyone wants to develop an AI engine for themselves. Everyone has a valid use case where they can integrate an AI system to bring in multiple benefits. Generative AI, multimodal models, and real-time AI-powered automation have unlocked new possibilities across industries. But the question is how to pull it off. What will it cost? Is it better to hire a team or outsource? What are the criteria to keep in mind?

First of all, developing AI solutions is no longer just about machine learning models - it involves leveraging pre-trained LLMs, fine-tuning models for specific applications, and optimizing AI deployments for cost and efficiency. Hence a structured approach to cost estimation, pricing models, and return on investment (ROI) calculations is necessary.
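As a starting point for the ROI side of that exercise, the two standard formulas are ROI = (total benefit - total cost) / total cost, and payback period = upfront cost / net monthly benefit. The sketch below applies them to invented figures; the helper names and every number are assumptions for illustration only.

```python
from typing import Optional


def roi(total_benefit: float, total_cost: float) -> float:
    """Return on investment as a ratio (1.5 means 150%)."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost


def payback_months(upfront_cost: float, monthly_benefit: float,
                   monthly_run_cost: float) -> Optional[float]:
    """Months needed to recover the upfront cost, or None if it never pays back."""
    net = monthly_benefit - monthly_run_cost
    if net <= 0:
        return None
    return upfront_cost / net


# Invented project figures (assumption), evaluated over a 24-month horizon.
upfront, monthly_benefit, monthly_run, months = 120_000, 25_000, 5_000, 24

total_cost = upfront + monthly_run * months   # 240,000
total_benefit = monthly_benefit * months      # 600,000

print(roi(total_benefit, total_cost))
print(payback_months(upfront, monthly_benefit, monthly_run))
```

Keeping the upfront build cost separate from recurring run costs (inference, hosting, monitoring) matters because the two scale very differently as usage grows.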

The cost of AI development can vary based on several factors, including the complexity of the model, data requirements, computational infrastructure, integration needs, and the development team's expertise.

Let’s look deeper into each of them.

AI models today range from fine-tuned pre-trained models (e.g., OpenAI GPT-4, Gemini, Claude) to enterprise-specific LLMs trained on proprietary data. The complexity of the model directly impacts development costs.

In this context, the cost implications can include:

But in general, complexity and cost have come down drastically compared to previous years, thanks to rapid advances in Gen AI models.

Generative AI relies on high-quality, curated datasets for domain-specific fine-tuning.

AI models require significant computing resources, whether running on cloud GPUs or fine-tuning with on-premise AI accelerators.

Integrating AI solutions into existing IT environments can be challenging due to compatibility issues. Thus costs can arise from:

The team structure typically includes:

Many startups and mid-sized businesses outsource AI development to reduce costs, leveraging pre-trained models and cloud-based AI solutions instead of building models from scratch.

AI models require continuous fine-tuning, monitoring, and scaling.

AI development in 2025 is more accessible, yet it can still be cost-intensive depending on the level of customization. GenAI, API-based AI services, and fine-tuned models are making AI development less complex, faster, and more cost-effective. Companies must therefore carefully evaluate the resources they are getting for their money and, in parallel, look into pricing models to justify AI investments.

At Techjays, we are at the cusp of the AI revolution. We were one of the first companies to focus fully on the AI domain after a decade of service in the IT industry. Here at Techjays, we specialize in AI-driven product development, from fine-tuned LLM solutions to enterprise AI integrations.

So it's time to get to work! Let’s build your idea with AI.

AI solutions involve leveraging pre-trained LLMs, fine-tuning models for specific applications, and optimizing AI deployments for cost and efficiency. Here's a structured approach to cost estimation, pricing models, and return on investment (ROI) calculations.

Testing has become the soul of modern software development - because it ensures functionality, reliability, and user satisfaction. As the scope and complexity of software systems expand, quality assurance (QA) has become more critical than ever. As businesses continue to embrace digital transformation, the future of QA is dynamic, driven by AI, other technological advancements, and changing business needs.

As 2024 draws to a close, it's time to deck the halls with key trends that can shape the QA landscape in 2025:

1. AI and Machine Learning in QA

AI and machine learning (ML) are revolutionizing QA processes by enabling predictive analytics, anomaly detection, and intelligent test case generation. In 2025, the adoption of AI-driven tools will escalate, offering capabilities such as:

AI integration in QA enables faster releases and ensures higher reliability, particularly in Agile and DevOps pipelines.

The traditional testing lifecycle is evolving with a shift-left approach, emphasizing early testing in the development process. By 2025, shift-left testing will become more robust through:

Shift-left testing aligns with Agile principles, promoting early defect detection and cost-efficient development cycles.

Hyperautomation combines AI, RPA (Robotic Process Automation), and orchestration tools to automate complex testing workflows. By 2025, hyperautomation will redefine QA by:

This trend ensures scalability, consistency, and efficiency, particularly for enterprises dealing with large-scale software systems.

The proliferation of IoT and edge computing introduces new testing challenges. By 2025, QA will expand to address:

QA strategies will focus on simulation environments to mimic real-world conditions, ensuring IoT applications perform as intended.

As cyberattacks become more sophisticated, cybersecurity testing is paramount. By 2025, QA will integrate advanced security measures, including:

Cybersecurity testing will transition from a specialized activity to a core QA function, ensuring secure software delivery.

Traditional performance testing focuses on identifying bottlenecks post-development. In 2025, performance engineering will take precedence, emphasizing:

Performance engineering ensures that applications meet user expectations, even under high-stress scenarios.

QA will evolve into quality engineering (QE), focusing on quality ownership across the software lifecycle. By 2025:

QE shifts the focus from defect detection to defect prevention, fostering a culture of quality ownership.

Cloud adoption is reshaping software development, necessitating specialized testing strategies. By 2025, QA will adapt to:

Cloud-native testing ensures reliability and efficiency in increasingly complex cloud ecosystems.

As blockchain technology becomes mainstream, QA teams must address its unique challenges. By 2025:

Blockchain testing will require specialized skills and tools to address this emerging domain.

AI applications must be transparent, unbiased, and ethical. By 2025, QA teams will incorporate:

QA for ethical AI will be a critical component of responsible software development.

Quantum computing is on the horizon, and its unique properties will challenge traditional QA methods. By 2025:

While still nascent, quantum testing will demand novel tools and approaches.

By 2025, test data management (TDM) will become more sophisticated, enabling QA teams to handle diverse testing needs. Key trends include:

Effective TDM ensures accurate testing while addressing data security and compliance concerns.

The rapidly changing QA landscape requires ongoing skill development. By 2025:

Continuous learning ensures QA professionals remain relevant in a technology-driven world.

The future of QA is transformative, driven by innovations in AI, cloud computing, IoT, and beyond. As software systems become more complex, QA must evolve from traditional testing to a holistic approach encompassing quality engineering, cybersecurity, and ethical AI. By embracing these trends, organizations can ensure robust, scalable, and user-centric software delivery, staying ahead in an ever-competitive digital landscape.

The Future of AI in Augmented Reality (AR) and Virtual Reality (VR) Applications

While Augmented Reality (AR) and Virtual Reality (VR) are themselves novel concepts for many, the integration of Artificial Intelligence (AI) is set to revolutionize the way humans interact with digital environments. With heightened sensory immersion, AI-powered AR and VR applications can transform industries and change the way we work in them, from healthcare and education to gaming and manufacturing.

This blog discusses what happens when AI is brought into AR/VR, and the possibilities that emerge.

The convergence of AI with AR and VR enables systems to analyze and respond to real-world inputs sensitively, creating dynamic and interactive user experiences.

To cite some real-world examples:

In healthcare, the AccuVein AR tool employs AI to analyze and overlay vein locations on a patient's skin for easier and more accurate injections or IV placements.

Similarly in industrial maintenance, Bosch’s Common Augmented Reality Platform (CAP) uses AI to recognize machine parts and overlay step-by-step repair instructions, streamlining maintenance tasks for industrial workers.

AR and VR technologies, combined with AI, are transforming numerous industries:

The contribution that AI-driven AR and VR applications can make in medical training, diagnostics, and treatment is immense:

AI-powered AR and VR have the potential to completely redefine educational experiences:

In industrial settings, productivity and safety are where AI-powered AR & VR can contribute considerably:

AI-integrated AR and VR can redefine consumer shopping experiences:

The entertainment industry benefits much from the advent of AI in AR and VR:

AR and VR at TECHJAYS

How TechJays uses Unity Engine to develop Immersive VR Experience for Meta Quest

At TechJays, we're excited to share how our expertise in Unity Engine and Meta Quest VR headsets allows us to create impactful and immersive VR solutions. As we dive into the technical aspects of our work, we'll highlight how we use Unity Engine to build interactive and realistic VR experiences, demonstrating our skill set and approach.

Why Unity Engine for VR Development?

Unity Engine is a powerful tool for VR development due to its versatility and extensive feature set. For Meta Quest VR headsets, Unity provides a robust platform that supports high-performance rendering, intuitive interaction design, and seamless integration with VR hardware. We use Unity Engine to deliver top-notch VR solutions:

1. Creating Realistic Interactions

Realistic interactions are fundamental to a convincing VR experience. We leverage Unity’s physics engine to simulate natural interactions between users and virtual objects.

2. Developing Aesthetic Environments

Visually appealing environments are crucial for engaging VR experiences. Unity’s tools help us create quality environments that react to user actions in real time.

3. Implementing User Interfaces

Effective user interfaces (UIs) in VR need to be intuitive and easy to navigate. Unity provides several tools to build and optimize VR UIs.

4. Optimizing Performance

Performance optimization is critical for delivering a smooth experience on VR headsets. Consistent frame rates are crucial in VR to avoid motion sickness. Unity provides several techniques to optimize the performance for better VR experiences.

We launched our first VR application to Meta Store: an interactive walkthrough of a VR environment allowing the user to explore a virtual environment with complete freedom and interaction.

The app was made using the Unity game engine with Meta XR SDK for Meta Quest headsets.

The app lets you explore a few immersive office interior environments in different daylight cycles by navigating the virtual space using intuitive controls like teleportation, movement, and snap rotation. The experience is further enhanced by specific interactive features, like playing video, grabbing objects, and pulling objects to hand.

Find it at the Meta Store Link: https://www.meta.com/experiences/techjays-office-tour/8439123209473841/

AR at TechJays: Transforming Experiences with Augmented Reality

At TechJays, we are committed to harnessing the power of Augmented Reality (AR) to craft interactive and innovative experiences across various industries. By leveraging advanced AR development tools such as Unity Engine and plugins such as Unity AR Foundation, ARCore, ARKit, and Vuforia, we create impactful AR applications that seamlessly blend the digital and physical worlds. These state-of-the-art technologies allow us to deliver precise, immersive AR experiences, enabling our clients to engage with dynamic, real-time interactions in both indoor and outdoor environments.

The confluence of AI into AR and VR is launching digital experiences into an era of unparalleled innovation. AI algorithms, computational hardware, and seamless connectivity will accelerate the adoption of these technologies across various industries. As we stand on the brink of this transformation, we at TechJays are committed to using our Unity Engine expertise to deliver high-quality VR solutions tailored to your needs. Our technical proficiency, combined with our understanding of Meta Quest VR capabilities, allows us to create immersive and effective VR experiences.

Get in Touch

If you’re interested in exploring how our VR development skills can benefit your business, contact TechJays today. We’re here to help you leverage the power of VR to achieve your goals and elevate your operations.

Among other industries, it is especially striking to see AI transforming the healthcare sector at a rapid pace. AI in healthcare management is driving revolutions in diagnostics, patient management, and predictive analytics. Unlike in pre-AI times, AI-powered applications can now handle large datasets, complex algorithms, and real-time insights, which in turn simplifies medical decision-making. Let's see the different ways AI is leading this transformation, with some real-world examples from Techjays’ foray into AI in healthcare projects.

1. Diagnostic Applications: Unleashing the Power of Machine Learning

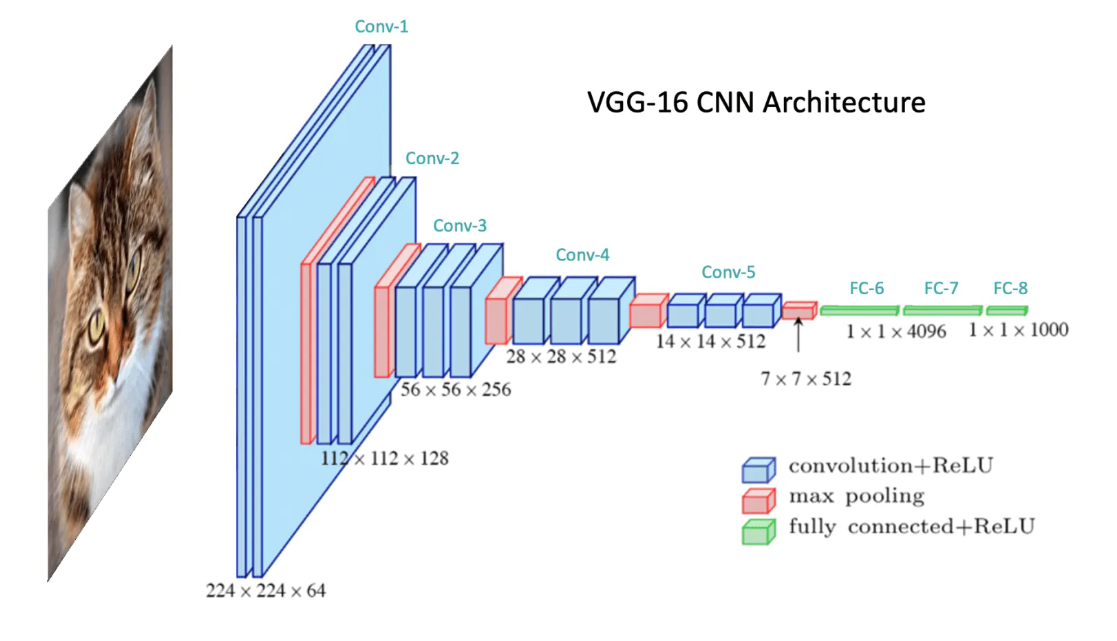

The current role of AI in enhancing medical diagnostics lies almost entirely with machine learning (ML) models, and among them, Convolutional Neural Networks (CNNs).

CNNs have shown ground-breaking results in analyzing medical images like radiology reports. AI models trained on vast amounts of radiology reports can detect pathologies. Be it tumors or fractures, these models are trained to read reports with accuracy rates on par with, or even exceeding, those of human radiologists.

Let's see some more specific examples.

Computer Vision in Medical Imaging: Similar to radiology reports, AI models can also process CT scans, MRIs, and X-rays using advanced feature extraction techniques. Data augmentation is also often employed to improve the analysis of limited medical datasets.

Natural Language Processing (NLP) for Electronic Health Records (EHRs): Unstructured text in electronic health records can be a big hindrance when analyzing medical records. NLP algorithms can extract clinical information from such unstructured text, giving physicians important diagnostic insights. Today, transformer models like BERT and GPT are being fine-tuned to understand medical terminology, which further helps in analyzing these records.

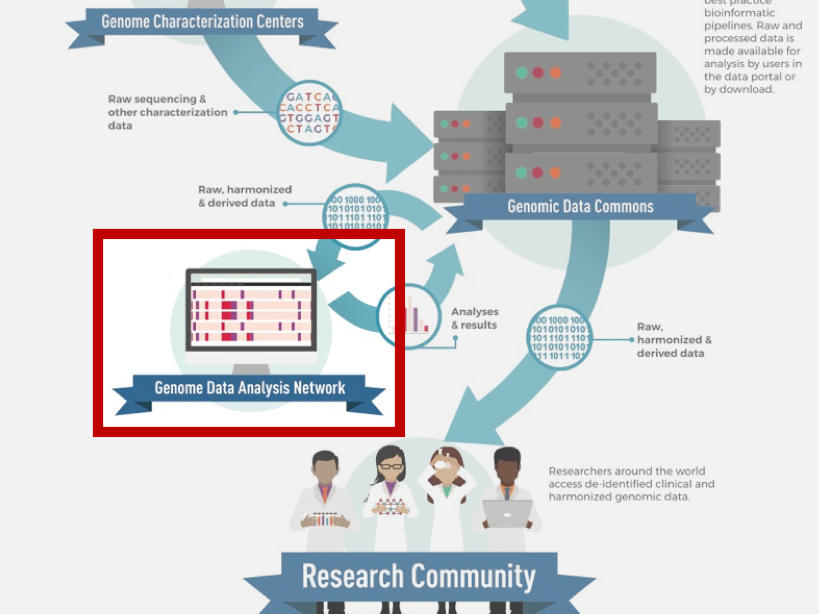

Genomic Data Analysis: AI is revolutionizing genomics as well, which is the study of an organism’s genetic material and how that information can be applied in cases like identifying disease predispositions. Analyzing genetic sequences is crucial in finding the genetic mutations that result in hereditary diseases, and algorithms like Random Forests and deep learning networks are showing exceptional efficiency in recognizing and analyzing them. With the power of AI, we are very close to looking at a future free of genetic diseases.

Potential Challenges:

Data Variability: The multiplicity of medical imaging devices and variations in data labeling is currently a challenge for using general AI models. Domain adaptation techniques may be required in such cases to handle variability.

Regulatory Constraints: AI systems need to undergo rigorous validation to meet the FDA's guidelines. This necessitates stringent performance monitoring to ensure safety and effectiveness.

Next, let's look at how AI transforms patient management processes.

2. Patient Management: Elevating Personalized and Predictive Care

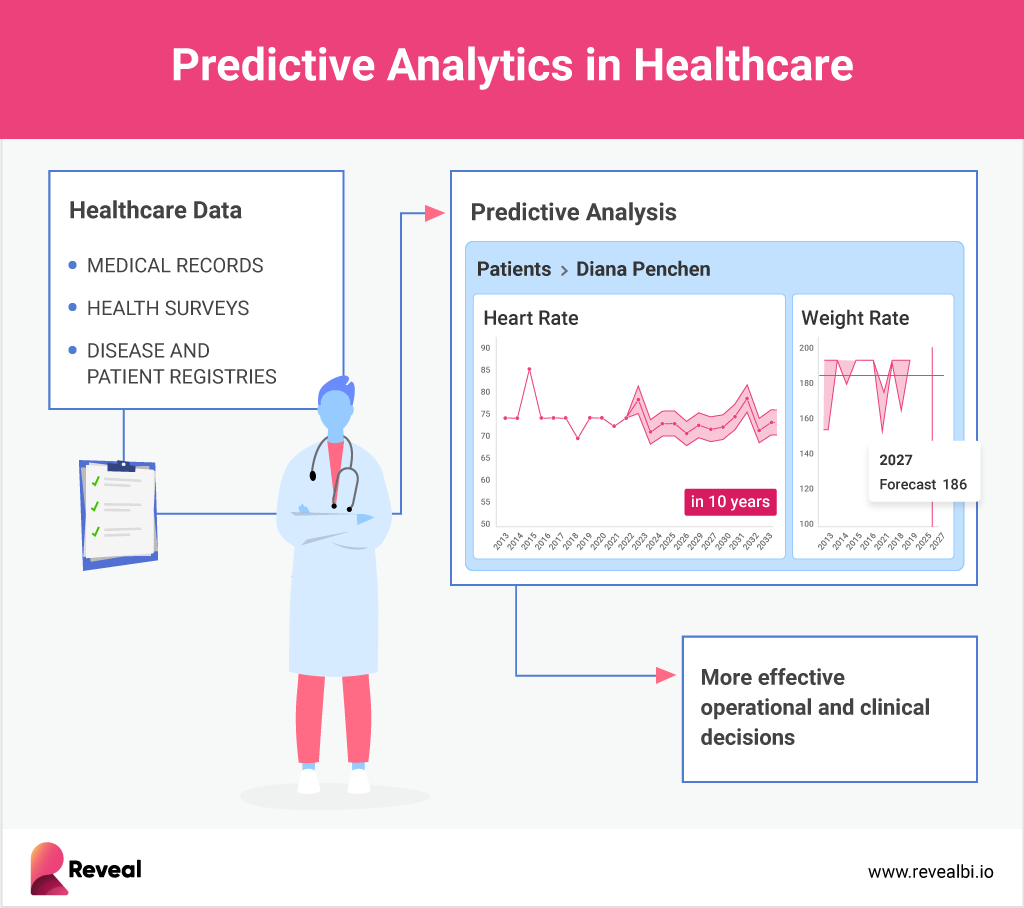

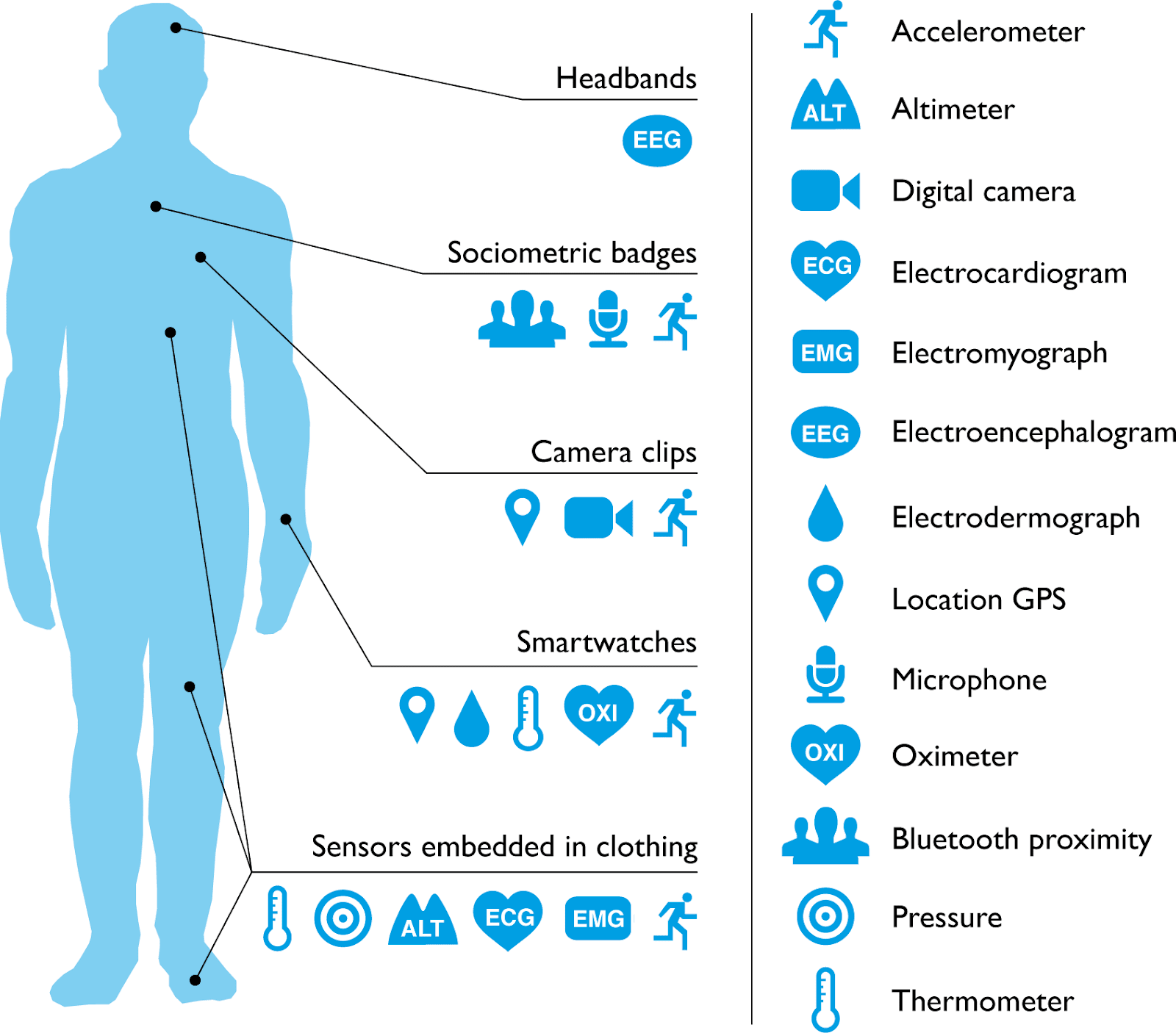

Predictive modeling is a significant benefit of AI in healthcare: AI systems can forecast disease progression and even suggest personalized treatment courses. In addition, Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) models can analyze data from wearable monitoring devices that track chronic conditions and send alerts when readings move outside prescribed levels.
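The alerting step for wearable readings can be illustrated with a simple range check. This is only a minimal sketch: the metric names and limits are hypothetical placeholders, and it does not include the RNN or LSTM modeling mentioned above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Threshold:
    low: float
    high: float


# Hypothetical prescribed ranges; real limits would be set by the clinician.
PRESCRIBED = {
    "blood_glucose_mg_dl": Threshold(70.0, 180.0),
    "systolic_bp_mm_hg": Threshold(90.0, 140.0),
}


def check_reading(metric: str, value: float) -> Optional[str]:
    """Return an alert message if the value is outside its prescribed range."""
    limits = PRESCRIBED[metric]
    if value < limits.low:
        return f"{metric} low: {value}"
    if value > limits.high:
        return f"{metric} high: {value}"
    return None  # reading is within range, no alert needed
```

In a real deployment, the value passed in could be a model forecast rather than a raw reading, so that alerts fire before a limit is actually crossed.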

Predictive Analytics: AI algorithms in healthcare projects can analyze patient history and predict patient outcomes. Today, deep reinforcement learning and Gradient boosting machines are used widely in conditions like sepsis or heart failure in making real-time decisions.

- Virtual Health Assistants: Chatbots in AI health apps support patients by answering medical queries and assisting in scheduling appointments. These models are usually trained on extensive volumes of medical literature so that they are adept at understanding and responding in a clinical context.

- Telemedicine Platforms: AI is well suited to automating administrative tasks such as transcribing consultations and triaging patients. Transcription relies on speech-to-text algorithms, while triage can apply Bayesian networks to a patient's symptoms.
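To make the Bayesian triage idea concrete, here is a minimal sketch using the simplest possible Bayesian network, a naive Bayes model in which symptoms are assumed independent given the triage class. Every probability and symptom name below is a made-up illustration value, not clinical data.

```python
from typing import Dict

# Hypothetical prior probability of each triage class.
PRIOR = {"urgent": 0.1, "routine": 0.9}

# Hypothetical P(symptom present | triage class).
LIKELIHOOD = {
    "urgent": {"chest_pain": 0.7, "fever": 0.4},
    "routine": {"chest_pain": 0.05, "fever": 0.3},
}


def triage_posterior(symptoms: Dict[str, bool]) -> Dict[str, float]:
    """Apply Bayes' rule to get P(class | observed symptoms)."""
    scores = {}
    for label, prior in PRIOR.items():
        p = prior
        for symptom, present in symptoms.items():
            p_present = LIKELIHOOD[label][symptom]
            p *= p_present if present else 1.0 - p_present
        scores[label] = p
    total = sum(scores.values())
    # Normalize so the class probabilities sum to 1.
    return {label: p / total for label, p in scores.items()}
```

A production triage system would use a richer network structure and clinically validated probabilities; the sketch only shows how symptom evidence shifts the posterior.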

Challenges in Patient Management:

- Data Privacy and Security: Medical records are highly sensitive data and hence handling them requires complying with stringent regulations like HIPAA. Such regulations are inevitable while incorporating AI in healthcare projects and require implementing robust encryption methods to preserve patient confidentiality.

- Bias and Fairness: Training AI models needs to be a very careful exercise. Training on imbalanced datasets may perpetuate healthcare disparities. To mitigate such biases, it is important to employ techniques like model fairness optimization and adversarial debiasing.

Model Fairness Optimization is a set of techniques aimed at reducing biases in machine learning models to ensure fair and equitable treatment across varying groups.

Adversarial Debiasing is also an in-processing technique used to reduce model bias where a secondary model is trained simultaneously to predict the characteristic that should not unfairly influence predictions.
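Before applying any debiasing technique, it helps to measure how unevenly a model treats different groups. One common metric is the demographic parity difference: the gap between the highest and lowest rate of positive predictions across groups. The sketch below is an illustrative assumption about how such a check might look, not a description of any specific fairness toolkit.

```python
from typing import List, Sequence


def selection_rate(predictions: Sequence[int], groups: Sequence[str], group: str) -> float:
    """Fraction of positive (1) predictions within one group."""
    chosen = [p for p, g in zip(predictions, groups) if g == group]
    return sum(chosen) / len(chosen)


def demographic_parity_difference(predictions: Sequence[int], groups: Sequence[str]) -> float:
    """Gap between the highest and lowest selection rate; 0.0 means parity."""
    rates: List[float] = [
        selection_rate(predictions, groups, g) for g in set(groups)
    ]
    return max(rates) - min(rates)
```

A value near 0 suggests similar treatment across groups on this one metric; a large gap is a signal to investigate the training data or apply a mitigation technique such as those described above.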

3. AI in Clinical Workflows

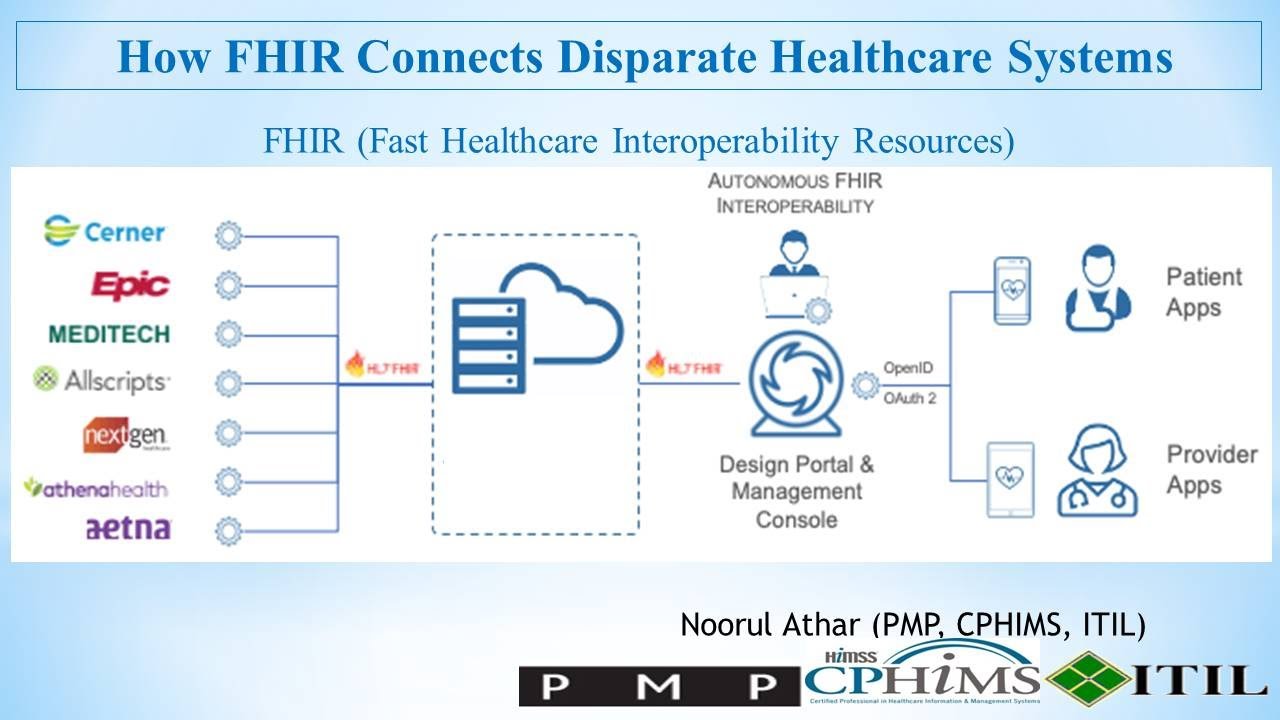

Interoperability with existing healthcare systems is essential when it comes to integrating AI into clinical environments.

Fast Healthcare Interoperability Resources (FHIR) standards facilitate data exchange between AI and EHR systems. However, in a practical scenario, real-time data processing can show latency. This can be addressed using high-throughput, low-latency data architectures.

Edge Computing in Healthcare: Edge computing is essential in healthcare to manage the volume and velocity of incoming data. In edge computing, data is processed closer to its source, thus reducing latency and enhancing response times. This is especially important for critical applications like patient monitoring in intensive care units.

- Federated Learning: This is a decentralized ML approach that trains AI models across multiple hospitals without compromising sensitive data. Here, to ensure data privacy while training models, techniques like differential privacy and secure multiparty computation are used.
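The core aggregation step of federated learning can be sketched with federated averaging (FedAvg), where a central server combines model weights from each hospital in proportion to its local sample count, without ever seeing the patient records. This is a simplified illustration; it omits the differential privacy and secure multiparty computation layers mentioned above.

```python
from typing import List, Tuple


def federated_average(updates: List[Tuple[List[float], int]]) -> List[float]:
    """Combine per-hospital weight vectors, weighted by local sample count.

    Each update is (weights, num_local_samples). Only weights leave the
    hospital; the raw patient data stays on site.
    """
    total_samples = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [
        sum(weights[i] * n for weights, n in updates) / total_samples
        for i in range(dim)
    ]
```

For example, a hospital with 300 samples contributes three times as much to the averaged weights as one with 100 samples.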

Challenges in Clinical Integration:

- Interoperability: AI in healthcare projects need to adhere to HL7 standards for data exchange and must be compatible with legacy systems. For this, middleware solutions and API-driven integrations might be necessary.

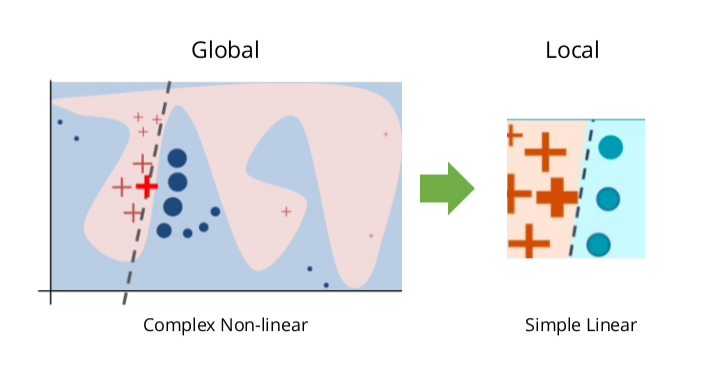

- Model Interpretability: Clinicians and practitioners need to understand the logic behind AI-driven recommendations and for this, might often need explainable AI (XAI) models. SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-Agnostic Explanations) are techniques that can provide transparency in making model decisions.
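The idea behind SHAP can be shown by computing exact Shapley values for a tiny model: each feature's contribution is its average marginal effect over all orderings in which features are switched from a baseline to the patient's actual values. This sketch does not use the SHAP library (which relies on faster approximations); the function name, model, and feature names are hypothetical.

```python
from itertools import permutations
from typing import Callable, Dict


def shapley_values(
    model: Callable[[Dict[str, float]], float],
    instance: Dict[str, float],
    baseline: Dict[str, float],
) -> Dict[str, float]:
    """Exact Shapley values; feasible only for a handful of features."""
    features = list(instance)
    totals = {f: 0.0 for f in features}
    orderings = list(permutations(features))
    for order in orderings:
        current = dict(baseline)  # start every ordering from the baseline
        previous = model(current)
        for f in order:
            current[f] = instance[f]  # switch this feature to its real value
            value = model(current)
            totals[f] += value - previous  # marginal contribution of f
            previous = value
    return {f: t / len(orderings) for f, t in totals.items()}
```

The resulting values always sum to the difference between the model's output for the patient and its output for the baseline, which is what makes the explanation additive.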

4. Ethical Considerations and the Future

As we deal with incorporating AI in healthcare projects, it is crucial to remember that it is a sensitive domain and that there are significant ethical questions about accountability and transparency. It is essential to establish ethical frameworks and guidelines for the responsible deployment of AI technologies.

- Explainable and Transparent AI: Developing interpretable models is as much an ethical imperative as it is a technical requirement. This can ensure trust in AI systems. To create transparent deep-learning models, research into XAI (explainable AI) is underway.

- Autonomous Decision-Making: Giving AI models the imperative to make autonomous decisions always raises liability concerns. Ensuring human oversight and developing ethical guidelines for AI deployment in clinical settings become critical.

Healthcare Tech at Techjays:

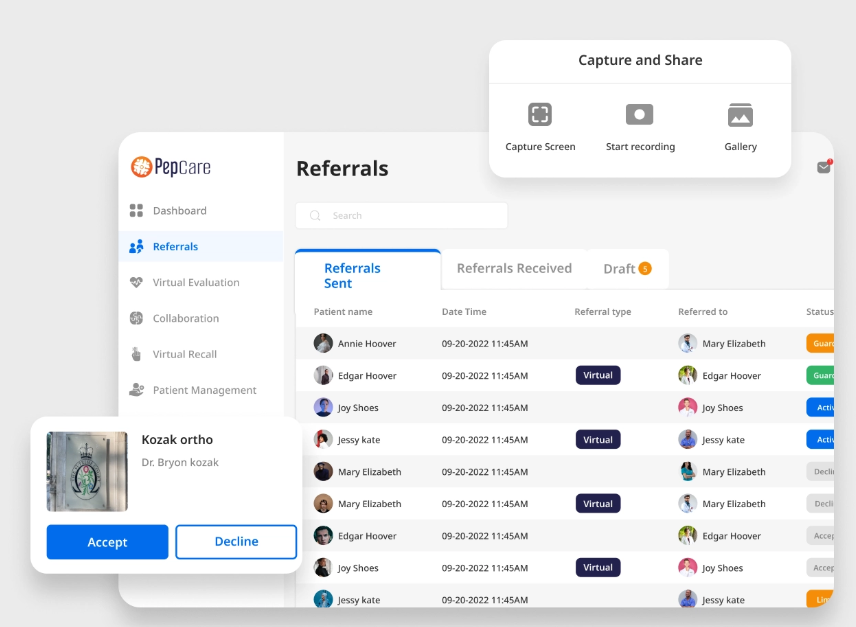

Our healthcare tech client, Pepcare, required a platform to enhance collaboration between doctors and patients, one that offers multiple collaboration and referral management tools by making wise use of technology and AI.

The vision of the creators was to create a seamless solution that simplifies administrative tasks, facilitates virtual consultations, and expands the network of dental practitioners.

The platform needed to digitize every communication mode and medical document and transform the patient referral process. This was to help doctors refer patients to other doctors and share all medical records and patient history through the HIPAA-secure platform.

Techjays set about creating the platform. Apart from making it work seamlessly, it was also imperative to address the increasing number of cyber threats and ensure security and privacy, as the platform dealt with extremely sensitive medical data.

Additionally, the need to include additional features such as HIPAA compliance required us to go the extra mile.

Our platform enabled medical practitioners to create groups among themselves where they can discuss cases, collaborate, and draw on expertise in different specialties.

In addition, the platform included a feature called the Patient Vault, where patients can keep all their old and new medical records, prescriptions, and reports, minimizing any chance of misplacing them while keeping them accessible whenever needed. This turned out to be a great way to maintain a patient's medical history.

The platform also includes all features necessary for doctors to impart virtual care, especially for follow-up evaluations - in-built screen recording to explain medical records like X-rays and upload educational and best practice videos so that patients can access them at any time.

Today, using our platform both patients and doctors can create accounts, and patients can search for doctors and look up ratings and reviews too.

Next up, it is an AI-powered level-up for PepCare built by Techjays!!!

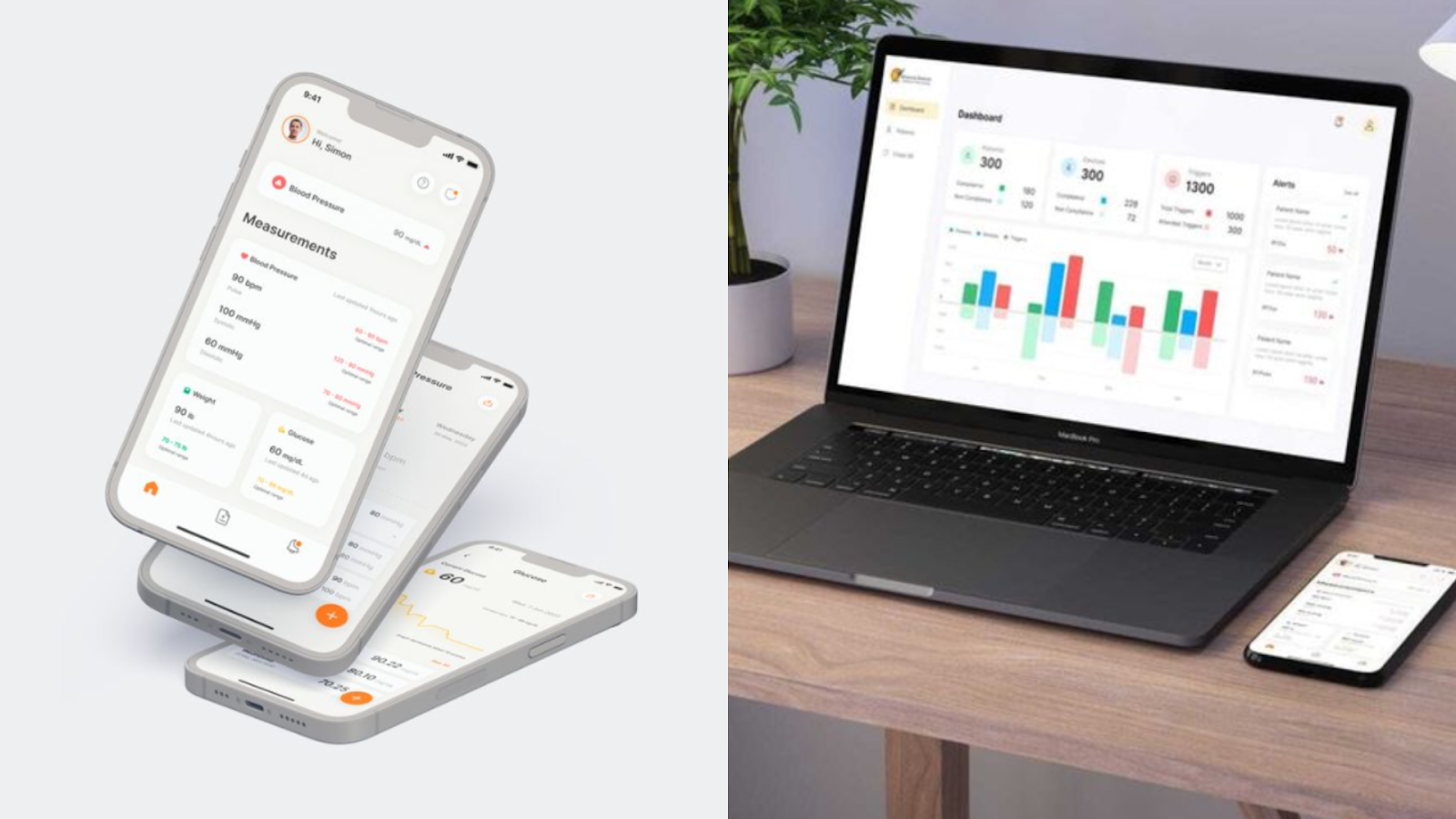

In this journey, the Advanced Institute for Diabetes and Endocrinology required Techjays to create a platform specifically for endocrinology patients, a platform that would capture patients’ health metrics, such as blood pressure, weight, blood sugar, etc.

The experts at Techjays created an interface where output from a physical monitoring device was synced to a patient’s smartphone, and from there to a dashboard created for the endocrinologists.

The product does end-to-end patient handholding from prescriptions to sending alerts when the metrics go beyond stipulated levels. This product is also gearing up to incorporate AI-powered processes now.

The Inevitable Foray into AI

The potential of AI in healthcare management, be it in diagnostics, patient management, or personalized medicine, is unprecedented. However, many technical challenges, particularly those around ensuring data security and upholding ethical standards, will need to be addressed. As research and technology evolve, collaboration between AI developers, healthcare professionals, and regulators will be essential to realize the full potential of AI in healthcare.

Integrating Artificial Intelligence (AI) in Quality Assurance (QA) is reimagining the software development lifecycle for good. Painstakingly creating and running test cases by hand are things of the past, with all its delays and human errors. Today, AI is stepping in, automating tedious tasks, predicting issues before they pop up, and letting QA teams focus on the big picture.

Below, we list ten ways in which AI-powered tools are revolutionizing, or can revolutionize, QA processes in software development:

1. Automating Test processes

Automatically Create Test Cases: One can create test cases automatically by using AI-driven platforms like Katalon, Mabl, and Testim. Such tools leverage natural language processing (NLP) and machine learning (ML) to create these test cases based on user interactions and requirements.

This not only helps in speeding up test creation but also facilitates collaboration by non-technical team members to contribute to QA, enhancing the scope of the testing.

Dynamic Test Case Generation: Dynamic test cases can also be created so that the testing processes are aware of the latest changes in the app, even as new updates roll out. Tools like Applitools help generate test cases dynamically. This can expand the QA team's coverage significantly, helping them avoid missing edge cases.

Usage experience at Techjays:

Model-Based Testing: We have used AI for model-based testing to simulate complex workflows and predict edge cases, creating scenarios that mimic real-world user behavior.

Behavior-Driven Development (BDD) Support: We integrate AI-driven automation frameworks with BDD tools like Cucumber, allowing QA teams to auto-generate tests from BDD feature files.

2. Predictive Analysis for Defect Prevention

Predictive Defect Analytics: AI models can analyze large volumes of historical data and predict potential defects before they arise. This helps developers address issues early, take precautions, and avoid expensive rework. Such models are adept at identifying trends and patterns from past data, and predictive analytics of defects can greatly reduce high-risk vulnerabilities.

Real-time Anomaly Detection: Faster detection of hidden bugs during testing is possible by using AI-based tools like Appvance and Functionize which use anomaly detection algorithms to identify irregularities. This real-time identification of errors can accelerate response times, preventing an escalation of minor issues into major problems.
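As a minimal illustration of anomaly detection on test metrics, the sketch below flags values whose z-score (distance from the mean in standard deviations) exceeds a threshold. This is a generic statistical technique chosen for illustration; it is an assumption, not a description of how Appvance or Functionize work internally.

```python
from statistics import mean, stdev
from typing import List, Sequence


def find_anomalies(values: Sequence[float], threshold: float = 2.0) -> List[int]:
    """Return indices of values more than `threshold` std devs from the mean."""
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        return []  # all values identical, nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]
```

For instance, applied to response times from a test run, this would surface the one request that took several times longer than the rest.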

Usage experience at Techjays:

Risk-Based Testing with AI: AI can prioritize test cases based on risk assessment and we have incorporated it at Techjays to help QA teams focus on areas that have the highest potential for defects, especially as applications scale.

Using Deep Learning for Root Cause Analysis: We use deep learning models to automate root cause analysis, learning from previous defects and helping engineers pinpoint the source of recurring issues.

3. Visual and Cognitive Testing

Visual Testing: Applitools Eyes is a visual testing tool that can detect discrepancies in the UI using AI, even minor ones that traditional testing misses. These tools identify inconsistencies in UI pixel by pixel, by comparing screenshots across devices. In cases where you require multi-device compatibility, these tools are significantly valuable, ensuring a uniform user experience across platforms.
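The pixel-by-pixel comparison described above can be sketched as follows, with screenshots modeled as 2D lists of grayscale values. This is a deliberately naive baseline under that assumption; commercial visual testing tools layer AI on top to ignore insignificant differences, and their internal algorithms are not shown here.

```python
from typing import List, Tuple

Image = List[List[int]]  # rows of grayscale pixel values (0-255)


def diff_pixels(baseline: Image, candidate: Image, tolerance: int = 0) -> List[Tuple[int, int]]:
    """Return (row, col) positions that differ by more than `tolerance`."""
    same_shape = len(baseline) == len(candidate) and all(
        len(a) == len(b) for a, b in zip(baseline, candidate)
    )
    if not same_shape:
        raise ValueError("screenshots must have the same dimensions")
    return [
        (r, c)
        for r, (row_a, row_b) in enumerate(zip(baseline, candidate))
        for c, (a, b) in enumerate(zip(row_a, row_b))
        if abs(a - b) > tolerance
    ]
```

Raising the tolerance is the simplest way to suppress noise such as anti-aliasing differences between devices.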

Cognitive QA: The process of cognitive QA involves conducting simulations of human-user interactions. Analysis of the application’s response is then done to predict user behavior. These insights can help in enhancing user experience (UX) , let developers better understand user pain points, and allow them to make improvements that make a difference with real users.

4. NLP and Self-healing Mechanisms

Self-evolving Tests: Maintaining test suites and keeping them in step with every product update used to be a tedious part of QA. AI’s self-healing capability allows tests to adjust by themselves to changes in the UI, be it button adjustments or layout changes.

Selenium Grid and Testim are comprehensive platforms that provide self-healing tests. This kind of adaptability keeps testing running smoothly with the least manual intervention and updates.
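One simple way to picture self-healing is a locator with fallbacks: if the primary locator no longer matches after a UI change, the test tries alternatives and reports which one worked. The sketch below is a toy model in which the page is a plain dictionary from locator strings to elements; the function name and locator syntax are hypothetical, and real tools use far richer matching.

```python
from typing import Dict, List, Optional, Tuple


def find_with_fallback(
    page: Dict[str, str], locators: List[str]
) -> Optional[Tuple[str, str]]:
    """Try locators in priority order; return (locator_used, element)."""
    for locator in locators:
        element = page.get(locator)
        if element is not None:
            return locator, element
    return None  # nothing matched, so the test must fail or be repaired


# The button's id changed in a new release, but its visible text did not.
page = {"text=Submit": "<button>Submit</button>"}
match = find_with_fallback(page, ["id=submit-btn", "text=Submit"])
```

Logging which fallback locator succeeded gives the team a chance to update the primary locator later.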

Natural Language Processing (NLP) in Test Scripts: As NLP algorithms can interpret human language, they can enable non-technical stakeholders to create tests without any prior coding knowledge. In Katalon, script generation can be done based on plain language input thanks to integrated NLP. This opens up significant scope for collaboration in testing, as it brings in cross-functional teams.

Usage experience at Techjays:

Multi-Language Support Using NLP: We use AI with NLP to generate test cases in multiple languages, which is especially useful for us for global applications that need localization testing.

Context-Aware Self-Healing Mechanisms: Context-aware AI can better handle changes in dynamic content. We use it at Techjays to enable self-healing scripts to adjust to complex, data-driven UI components.

5. Speeding Up Regression Testing

Automated Regression Testing: This is a major step forward that AI in QA has achieved: reducing the time spent on regression testing and consequently facilitating faster updates and releases. Tools like Mabl and Tricentis can run multiple test suites simultaneously by automating regression testing and accelerating the feedback loop.

Continuous Testing Integration: Quality can be verified in real time by integrating testing with CI/CD pipelines, where AI tools automatically trigger tests after every code change.

6. AI-driven Performance Testing

Performance Bottleneck Detection: Analysing performance metrics across various components to track different bottlenecks is a crucial step and tools like Dynatrace are adept in using AI to predict performance issues. As this is a data-driven approach, it helps development teams achieve performance efficiency and fine-tune their product.

Real-time Monitoring and Insights: AI-driven tools can monitor an application and its performance under various conditions including stress using real-time data and bringing up QA issues to the team. This minimizes production failures and enables creators to implement corrective actions immediately, ensuring a smooth experience even during peak loads.

Usage experience at Techjays:

Self-Tuning Performance Testing: We use AI to auto-tune testing parameters like load, concurrent users, and transaction rate based on real-time performance data.

7. Enhanced Defect Classification

Root Cause Analysis with AI: The development team always needs to prioritize issues, addressing critical ones first. Tools like QMetry and Leapwork help classify defects based on their resulting impact. This helps prioritize tasks correctly, thus enabling smarter resource allocation.

Automated Defect Logging: Automatic logging and then categorization of defects saves QA teams a huge amount of time and at the same time improves defect traceability across the software’s lifecycle. Automating this task enables QA teams to focus on resolving issues rather than documenting them.

8. Boosting Coverage

Prioritization: Machine learning algorithms can identify high-risk areas and prioritize tests for the features most likely to fail. This can increase test coverage significantly without putting extra workload on the team.

Optimization of Coverage: On top of critical issues, AI tools like Hexaware and QARA map out uncovered test areas as well, ensuring that critical functionalities are not overlooked. Such intelligent coverage mapping can expand coverage.
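Risk-based prioritization can be sketched as ordering tests by a risk score, here the product of an estimated failure probability and a business impact rating. The class, field names, and scoring formula are illustrative assumptions; in practice the failure probability would come from a model trained on historical test results.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    name: str
    failure_probability: float  # assumed to come from a model, 0.0 to 1.0
    impact: float  # assumed business impact, 1 (minor) to 5 (critical)


def prioritize(tests: List[Candidate]) -> List[Candidate]:
    """Order tests so the highest-risk ones run first."""
    return sorted(
        tests, key=lambda t: t.failure_probability * t.impact, reverse=True
    )
```

Running the top of this list first means that, when time is limited, the tests most likely to reveal costly defects still get executed.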

9. Some use cases from the real world

In Financial Services: Finance apps are liable to many compliance and regulatory frameworks and aligning with each of these frameworks may require handling complex testing scenarios. Platforms like Functionize can help financial institutions ensure regulatory compliance.

In E-commerce: A seamless user experience, even during peak hours, is what every e-commerce platform strives for. Such platforms can use AI tools for customer-focused testing. AI-powered visual testing tools excel at tracking display issues across various devices.

10. Tools and Trends Shaping AI in QA

Generative AI in QA: Generative AI is the latest talk of the town which can multiply efficiency in any use case where AI can perform. Tools like Copado and Mabl utilize generative AI to create complex test scenarios which can help increase the depth and accuracy of testing.

AI QA in the Cloud: BrowserStack and Perfecto are cloud-based AI QA platforms that reduce infrastructure needs for testing, provide scalable testing environments, and speed up the entire testing process.

Thus, the advent of AI in the QA process has reduced testing time by automating repetitive tasks, improved accuracy by reducing human error, enabled testing at scale across various devices and environments, and ultimately increased test coverage without adding overhead on resources.

AI is bringing a significant transformation to QA by enabling deeper insights. This will only continue to improve as AI technology advances.

At its core, evolution isn’t just a passing phase; it’s an ongoing process. Every being and concept, from nature to technology, evolves through adaptation, learning, and constant refinement. Artificial intelligence was once purely "artificial"—a distant concept. Today, it has merged with human ingenuity, transforming not only businesses but also daily lives.

At Techjays, we don't just build AI solutions; we revolutionize how they impact real lives. Technology, after all, is a double-edged sword—capable of being a boon or a bane, depending on its application. Our mission is to leverage AI to foster a better world, one where humans and artificial intelligence work in harmony to enhance our collective future.

For the average person, AI is not about code or algorithms; it’s about convenience, insight, and simplified solutions. Take a look around: once, mobile phones were a luxury—today, they’re powered by AI, offering us personal assistance at our fingertips. Whether it’s a quick reminder from Siri, a recommended show on Netflix, or apps that manage personal finances, health, or even grammar, AI has seamlessly integrated into our routines. It’s moved from a “good to have” to a “must-have,” improving lifestyles in ways we often take for granted.

As individuals recognize the value of AI in daily life, businesses seek AI-powered solutions to address their most pressing challenges. This is where Techjays comes in. We aim to create straightforward, impactful AI solutions that enhance user experiences, drive efficiency, and solve complex business problems. We've done it before, and we’re committed to continuous, incremental improvement.

Stay tuned as we share insights, stories, and innovations from Techjays, illustrating how we harness the power of AI to shape a smarter, more connected world.


In the rapidly evolving world of AI, every update opens new doors to innovation and efficiency. Anthropic’s latest release of Claude 3.5 Sonnet and Claude 3.5 Haiku models is no exception. These updates introduce groundbreaking enhancements that not only refine AI interactions but also broaden the scope of what AI development services can achieve, especially in automation and quality assurance.

The release also includes a beta feature that enables Claude to interact with computers the way humans do - by looking at the screen, moving a cursor, clicking, and typing text. This adds a whole new layer of functionality that we’ve been anticipating: AI that doesn’t just analyze or compute but also acts on its analysis in real time.

The Claude 3.5 Sonnet and Claude 3.5 Haiku models are designed to serve different use cases while offering enhanced capabilities:

Together, these models provide a versatile set of tools for developers, businesses, and AI enthusiasts. Whether your use case demands detailed insights or quick actions, Claude 3.5 has you covered.

Arguably, the most exciting development is the Computer Use API, which represents a significant leap in AI capability. This feature allows Claude to interact with computer interfaces, mimicking the actions humans take while using a computer. Through this API, Claude can now:

This new functionality essentially allows developers to direct Claude to perform tasks as if it were a human user sitting in front of a computer. The applications for this are vast. Here are a few examples:

This advancement in AI interaction aligns with our vision at Techjays—AI that doesn’t just process data but can act upon it, opening up endless possibilities for automation and operational efficiency.
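To make the "look at the screen, move a cursor, click, and type" loop more concrete, here is a minimal Python sketch of how an application might apply a sequence of model-requested actions. Everything in it is an assumption for illustration: `FakeScreen`, `apply_action`, `run_plan`, and the action names are hypothetical, the screen is simulated in memory, and the code does not call Anthropic's API or reproduce its actual schema.

```python
from dataclasses import dataclass, field


@dataclass
class FakeScreen:
    """Hypothetical in-memory stand-in for a real desktop (illustration only)."""
    cursor: tuple = (0, 0)
    clicks: list = field(default_factory=list)
    typed: list = field(default_factory=list)


def apply_action(screen: FakeScreen, action: dict) -> str:
    """Apply one requested action to the fake screen and report what happened."""
    kind = action["action"]  # assumed field name, not an official schema
    if kind == "screenshot":
        # A real integration would return an image; here we just describe state.
        return f"cursor at {screen.cursor}, {len(screen.typed)} text entries"
    if kind == "mouse_move":
        x, y = action["coordinate"]
        screen.cursor = (x, y)
        return "moved"
    if kind == "left_click":
        screen.clicks.append(screen.cursor)
        return "clicked"
    if kind == "type":
        screen.typed.append(action["text"])
        return "typed"
    raise ValueError(f"unsupported action: {kind}")


def run_plan(screen: FakeScreen, plan: list) -> list:
    """Run each action in order, collecting the result of every step."""
    return [apply_action(screen, step) for step in plan]


if __name__ == "__main__":
    screen = FakeScreen()
    plan = [
        {"action": "mouse_move", "coordinate": [120, 80]},
        {"action": "left_click"},
        {"action": "type", "text": "hello"},
    ]
    print(run_plan(screen, plan))
```

In a real integration, the results of each step would be sent back to the model so it can decide on the next action; the sketch only shows the dispatching half of that loop.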

Check this interesting video by Anthropic on X.com

Reflecting on these developments, I can confidently say we are closer than ever to AI-driven quality assurance (QA). Here’s why this matters:

As we explore these incredible tools at Techjays, I’m excited to see where this will take us. With the power of the Claude 3.5 Sonnet and Haiku models, combined with Claude’s newfound ability to interact with computers, we’re standing on the brink of a new era in AI-driven automation and quality assurance.

Stay tuned for more updates as we dive deeper into the potential of these tools.


Until a decade ago, especially in Hollywood sci-fi movies, Artificial Intelligence (AI) was cast as a villain from the distant future. Neither part of that picture holds today: AI is no longer a futuristic concept, nor is it our nemesis. In fact, it is becoming one of our biggest allies.


Apart from its impact in other domains, AI is already transforming the way software is developed. It makes development faster and less error-prone, but it is not meant to replace human creativity. Whether you are building an app or managing complex cloud systems, integrating AI into your software development process will help you deliver a more robust product. From helping developers write code to testing applications and managing projects, let’s dive in and see how deep the potential of AI runs in the software development landscape.

At the top of the list, of course, is the groundbreaking progress in code generation.

Automating Code Generation and Assistance

To start with, take GitHub Copilot. Powered by OpenAI’s Codex, it can generate entire code snippets from simple natural-language instructions. For the average coder, software development no longer has to mean routine, mundane tasks; it can mean spending time on more value-adding activities.

Along with this, AI can also help refactor existing code and check that established best practices are followed. Integrating AI into Integrated Development Environments (IDEs) provides real-time suggestions and minimizes errors.

Another example is Google's TensorFlow AutoML, which enables developers to build machine-learning models. Similarly, Microsoft’s Azure AI enables coders to integrate AI functionality into apps.

This growing accessibility has put AI within reach of small teams, including teams with no specialized knowledge of AI.

Enhance your coding process with AI development services for automated code support, which speeds up the whole development process and minimizes the chance of human error in coding.

Testing and Bug Detection – on the wings of AI

Of all the ground AI has broken in software development, nothing matches AI-driven testing tools, because before the advent of AI, testing was one of the most time-consuming stages of the development sequence.

AI-driven testing tools have revolutionized this stage with the capability to simulate hundreds of test cases simultaneously. On top of that, they can perform stress testing at a scale no team of human testers, however big, could match.

They can also predict errors from historical data and can even fix them automatically.


This is where SmartBear and Functionize come in. Both are AI tools that can run regression, unit, and functional tests automatically, helping developers address bugs in the code pre-emptively. Another example is Google Cloud's AI, which automatically detects security issues and inefficiencies in large-scale cloud environments.

Project Management!

As you may know, software creation involves not just coding but also many coordination and management activities, which broadly come under project management.

In such an environment, AI can help assign tasks and anticipate potential delays and resource bottlenecks by analyzing past projects and workflows. Project management platforms like Jira and Monday.com now integrate AI into their offerings, enabling them to suggest more efficient workflows. AI can analyze team performance records and the complexity of the code involved in a project and then predict delivery times more accurately. This real-time feedback and risk analysis enables managers to make timely, informed decisions.

Bolstering Security and Smoothing Maintenance

Unlike conventional security defenses, AI-powered security tools such as Darktrace and CrowdStrike can detect and resolve security breaches in real time. They rely on anomaly detection, learning what normal activity looks like from existing data and flagging deviations from it. Security is one of the most important aspects of software development, especially given the number of emerging cyber threats.
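As a rough illustration of the anomaly-detection idea, here is a minimal Python sketch that flags values far from a baseline using a simple z-score. It is an assumption-laden toy: the function name `find_anomalies`, the threshold, and the sample numbers are hypothetical, and commercial tools such as those named above use far more sophisticated methods than this.

```python
from statistics import mean, stdev


def find_anomalies(baseline, observations, threshold=3.0):
    """Return observations whose z-score against the baseline exceeds threshold.

    baseline: historical values assumed to represent normal behavior.
    observations: new values to check (for example, requests per minute).
    """
    mu = mean(baseline)
    sigma = stdev(baseline)  # sample standard deviation; needs 2+ baseline points
    if sigma == 0:
        # A perfectly flat baseline: anything different counts as unusual.
        return [x for x in observations if x != mu]
    return [x for x in observations if abs(x - mu) / sigma > threshold]


if __name__ == "__main__":
    normal_traffic = [100, 102, 98, 101, 99, 100, 103, 97]  # made-up numbers
    new_traffic = [101, 250, 99, 5]
    print(find_anomalies(normal_traffic, new_traffic))
```

The design choice here is deliberately simple: the baseline defines "normal", and anything more than a few standard deviations away is reported for a human or another system to investigate.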

Similarly, maintenance checks are also being taken over by AI. It can indicate when components need updates or maintenance, a particular benefit for DevOps teams. It can also recommend patches by monitoring code performance, reducing downtime and keeping applications up to date.

Building Intelligent and Adaptive Applications

AI has changed not only the processes of software development but also the nature of applications themselves. Intelligent, responsive applications that provide users with highly personalized experiences are the new norm. AI-powered recommendation engines tailor content to individual users based on their past behavior, notably on platforms like YouTube and Netflix, where recommendation engines grow more sophisticated by the day.

On top of that, these applications can now converse with users, thanks to the rise of natural language processing (NLP). Chatbots and virtual assistants are becoming more human-like by the day. Models such as OpenAI's GPT and Google's BERT can comprehend and respond to user queries with the flexibility of human interaction.

Continuous Integration/Continuous Delivery (CI/CD)

AI has transformed the CI/CD pipeline. Today, AI-powered analytics can predict potential deployment failures and automate manual tasks, enabling smoother continuous integration and delivery. Tools like CircleCI and Jenkins now help ensure smoother and faster product releases by identifying key areas for deployment.

These systems also suggest improvements based on past deployment data, making the CI/CD process more resilient and adaptable to volatile conditions.

Cloud Management and DevOps

Infrastructure provisioning and scaling can now be handled far more efficiently, thanks to tools like AWS Lambda, Azure AI, and Google Cloud’s AI. Judicious management of cloud resources is no longer a hardship, especially as it is automated. This, in turn, facilitates predictive autoscaling, which optimizes resource allocation based on real-time data. During traffic spikes and other unpredictable events, this can prove invaluable, ensuring high performance, reduced costs, and solid system reliability. Similarly, AI-enabled DevOps tools offer automated troubleshooting.
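To show what predictive autoscaling means in practice, here is a minimal Python sketch that forecasts the next load value from recent history and converts it into a replica count. It is a simplified assumption, not how any of the cloud providers above implement scaling: `forecast_next`, `replicas_needed`, the per-replica capacity, and the limits are all hypothetical, and the forecast is a naive linear extrapolation.

```python
import math


def forecast_next(load_history, window=3):
    """Naively forecast the next load: last value plus the average recent step."""
    if not load_history:
        raise ValueError("load_history must not be empty")
    recent = load_history[-window:]
    steps = max(len(recent) - 1, 1)
    trend = (recent[-1] - recent[0]) / steps  # average change per interval
    return max(recent[-1] + trend, 0.0)


def replicas_needed(forecast, capacity_per_replica, min_replicas=1, max_replicas=10):
    """Convert a forecast load into a replica count, clamped to safe limits."""
    wanted = math.ceil(forecast / capacity_per_replica)
    return max(min_replicas, min(wanted, max_replicas))


if __name__ == "__main__":
    history = [100, 200, 300]  # made-up requests per second
    predicted = forecast_next(history)
    print(predicted, replicas_needed(predicted, capacity_per_replica=150))
```

The point of scaling on a forecast rather than the current reading is that new capacity can be ready before a spike arrives; the clamp keeps a bad forecast from scaling to zero or running up unbounded cost.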

The New Era of Software Development – already a year old!

AI is already making a profound difference by automating routine tasks, improving code quality, scaling up and enhancing testing, and making responsive, intelligent applications possible. It is changing how software is built and deployed.

More importantly, all these tools and resources are becoming increasingly accessible, and AI today is a key partner for coders in innovation.


AI has revolutionized business processes; there’s no arguing that. The initial trend among businesses was to adopt existing, pre-designed AI models for their processes.

But such off-the-shelf AI solutions, even though they can offer quick benefits, often lack the specificity to address the unique challenges that individual businesses face.

Custom AI solutions, on the other hand, are tailored specifically to the needs of the business, leading to a higher return on investment (ROI).

Instead of adopting a one-size-fits-all approach, custom AI solutions are systems designed exclusively for a business's unique operations. They are also trained not on generic data but on data relevant to the particular business's processes and context.

Such models are ideally developed in partnership with AI experts, data scientists, and domain-specific professionals who have a deep understanding of the industry and the firm’s needs. Custom AI models can range from a personalized recommendation engine for an e-commerce platform to automated financial decision-making in fintech to a sophisticated predictive-maintenance system in manufacturing.

The primary advantage of custom AI solutions is their relevance to specific business problems, which lets them provide accurate insights. Off-the-shelf AI models, on the other hand, may not fully capture the nuances of a particular industry or business, as they are designed for a broad audience.

A custom AI model, by contrast, can be trained on the company’s own data and designed with its unique goals in mind, leading to more relevant insights.

Off-the-shelf solutions are often rigid in terms of functionality. This is a huge obstacle if the company is planning to expand into new markets, add additional product lines, or tackle different and new operational challenges.

A custom AI model, on the other hand, is designed from scratch, so it can be continuously adapted and scaled to accommodate new challenges and changes in the domain, and retrained on new data for new business goals.

Almost every aspect of an AI model's performance depends on the data it is trained on. Off-the-shelf models are almost always pre-trained on generic datasets that are usually not specific to any one industry. Custom solutions, however, are often trained on a business's proprietary data, allowing them to produce better insights and recommendations.

Thus, custom AI solutions are well suited to businesses that already have access to large amounts of data from their operations, such as customer behavior, operational statistics, or market trends.

In today’s competitive landscape, possessing a custom AI solution can be a game-changer. While off-the-shelf models are available to everyone, custom AI models offer solutions unique to your process and business. The insights thus obtained can provide an edge over competitors who don’t possess powerful custom models.